In a continued effort to strengthen Instagram privacy and safety, Meta has rolled out a series of new features aimed at protecting teenage users from harmful interactions and raising awareness about its reporting and blocking tools.

These updates build on Meta’s broader commitment to fostering a safer digital environment, especially for vulnerable users like teens, who are more likely to encounter unwanted or predatory behavior online.

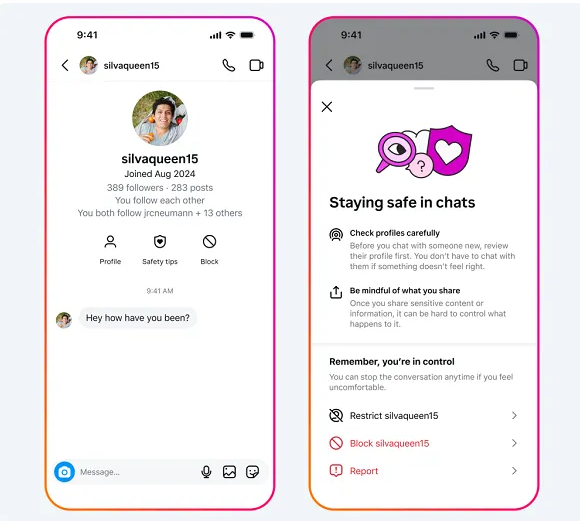

New “Safety Tips” Prompts in DMs

The first major update is the introduction of in-chat “Safety Tips” prompts that offer real-time guidance for teen users. These alerts link directly to resources on spotting potential scams, staying safe when interacting with strangers, and recognizing red flags in conversations. They may be especially useful in situations such as vanish-mode chats on Instagram or unexpected DMs from unfamiliar accounts.

Rather than simply issuing a warning, the feature aims to give young users immediate access to tools and guidance at the moment they need it, directly within their private messages.

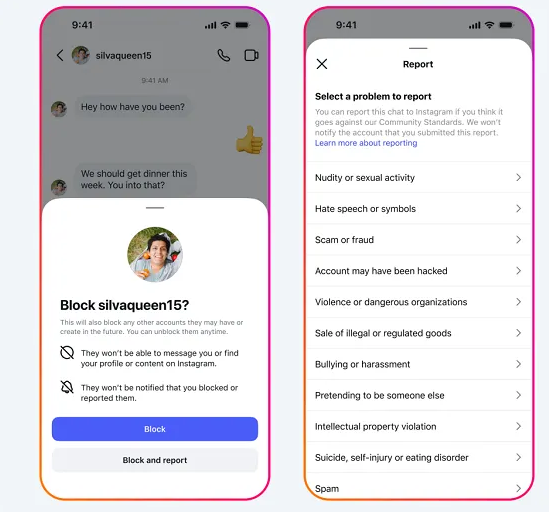

Easier Blocking and Reporting in One Tap

To make protective actions simpler, Meta has also launched a combined “Block & Report” option in Instagram DMs. Previously, users had to complete two separate actions to block and report an account; now they can do both in a single step.

🗨️ Meta explains:

“While we’ve always encouraged people to both block and report, this new combined option will make this process easier, and help make sure potentially violating accounts are reported to us, so we can review and take action.”

The goal is to streamline the process and make sure potentially dangerous accounts don’t slip through the cracks. The option complements Instagram’s existing Restrict feature, which also gives users more control over who can interact with them.

Account Creation Info & One-Tap Blocking

Instagram now also shows when an account was created, giving teens more context about who they’re chatting with. Together with one-tap blocking, this makes it easier for teens to identify and avoid suspicious or newly created accounts, which are often associated with spam or abuse.

Adult-Managed Accounts Featuring Children Under Stricter Review

Meta is also expanding safeguards around adult-managed accounts that feature children, which can attract predatory attention. These accounts, in which adults create and manage profiles for children under 13, are now receiving increased scrutiny.

In fact, earlier this year, Meta’s moderation teams removed:

- Nearly 135,000 Instagram accounts for leaving sexualized comments or requesting explicit content from accounts featuring children.

- An additional 500,000 related accounts across Facebook and Instagram, also for violating safety policies.

This crackdown highlights growing concern about this kind of abuse and the importance of platform accountability in protecting minors. Separately, users who are unsure how an account is behaving toward them can check whether someone has blocked them on Instagram.

Teens Removed from Suspicious Recommendations

In addition, teen accounts will no longer appear in recommendations to potentially suspicious adults, a further step to limit exposure to bad actors. By reducing discoverability, Meta aims to stop harmful interactions before they begin.

Impact of Current Protections

Meta’s previous protections are already showing promising results:

- The Location Notice (rolled out in June) was shown to teens over 1 million times, with 10% tapping to learn more about privacy settings.

- Since the launch of nudity protection in DMs, 99% of users (including teens) have kept the feature enabled.

- Over 40% of blurred images received via DMs remained blurred, suggesting that many recipients chose not to view potentially unwanted nude images.

These figures reinforce the importance of proactive safety features and user education in minimizing online risks.

🇪🇺 Meta Supports Raising the Digital Age Limit

Meta is also backing efforts to raise the minimum age for social media access. Several EU nations are considering a “Digital Majority Age”, with proposals to restrict platform access to users aged 15 or older, possibly rising to 16 after further consultation.

By publicly supporting this move, Meta is aligning itself with growing regulatory pressure, likely as both a moral stance and a strategic step to stay ahead of legal reforms. In a separate update, Meta also clarified that it would not restrict the reach of posts for using “Link in Bio”, easing concerns about visibility.

The Bottom Line

Meta’s latest updates on Instagram represent meaningful progress in making the platform safer for teens. With new tools, easier reporting, and more transparency, young users are gaining more control, visibility, and support in navigating online interactions.

But as always, digital safety is a shared responsibility between platforms, parents, and users themselves.

FAQs About Meta’s New Teen Protection Features on Instagram

1. What is the new “Safety Tips” feature in Instagram DMs?

It’s an in-chat prompt that provides teens with quick access to safety advice, including how to identify scams and deal with strangers on the platform.

2. How does the new “Block & Report” option help?

Previously, blocking and reporting were separate actions. The new option combines them into one step, streamlining the process and making it more likely that suspicious accounts are reported to Meta for review.

3. What does “account creation info” do?

Teen users can now see when an account was created, giving them better context about new or suspicious profiles they might encounter.

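4. Why is Meta focusing on adult-managed accounts that feature children?

These accounts have been targeted by adults who leave sexualized comments or request explicit content. Meta has removed nearly 135,000 Instagram accounts for this behavior, plus 500,000 related accounts across Facebook and Instagram, to better protect young users from exploitation.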

5. What is the Digital Majority Age, and why does Meta support it?

It’s a proposed rule, under consideration in several EU countries, that would restrict social media access to users aged 15 or older (possibly 16). Meta supports it as part of its broader commitment to user safety, and likely also as a way to stay ahead of legal requirements.